As companies race to automate, AI agent deployment has become a source of competitive advantage. According to Gartner’s predictions for 2026, more than 70% of enterprises will deploy AI agents to streamline major operations and automate decision-making.

However, deploying AI agents at scale isn’t plug-and-play. From model bias and fairness issues to data governance gaps and poor rollout strategy, many risks and ethical challenges stall businesses.

In this article, we’ll unpack the most common ethical pitfalls in AI agent deployment that can undermine reliability. We’ll also walk you through how businesses can avoid these mistakes and deploy AI agents responsibly, without compromising trust.

What Does It Mean to Deploy AI Agents?

Deploying AI agents means integrating them into your business systems. AI agents are software that can retain memory of past tasks, set goals, and take actions without step-by-step human input. Deployment happens when you move them out of testing and into the tools people use every day, like Slack, CRM platforms, or apps, to automate work such as customer support tickets or sales follow-ups.

Deployment is different from just “using AI”: in production, the system makes decisions and interacts with people or other systems at scale. A PwC survey reports that 66% of companies deploying AI agents see measurable productivity gains.

Where Can You Deploy AI Agents?

You can deploy AI agents on cloud platforms, inside SaaS tools, or through APIs. They can power AI chatbots, customer support apps, internal workflows, and even decision-making tools.

1. Cloud and API Deployments

AI agents run well in the cloud on managed platforms like Google Vertex AI or Amazon Bedrock. According to one industry report, over 60% of companies use cloud-based AI to power half of their operations.

APIs make it easy to add AI to products in healthcare, logistics, or e-commerce. For example, developers can use OpenAI’s API to automate lead scoring or summarize emails.
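For illustration, here is a minimal Python sketch of the email-summary case using only the standard library. It assumes OpenAI’s Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`). The model name `gpt-4o-mini`, the system prompt, and the helper names `build_summary_request` and `extract_summary` are assumptions for this example. The function only builds the request, so you can inspect it before anything is sent.

```python
import json
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_summary_request(email_text: str, api_key: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request that asks for an email summary."""
    payload = {
        "model": model,  # assumed model name; use one your account has access to
        "messages": [
            {"role": "system", "content": "Summarize the user's email in two sentences."},
            {"role": "user", "content": email_text},
        ],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def extract_summary(response_body: bytes) -> str:
    """Pull the assistant's reply text out of a Chat Completions JSON response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]
```

To actually send it, you would pass the request to `urllib.request.urlopen` and hand the response body to `extract_summary`. In production, you would more likely use OpenAI’s official SDK and read the API key from an environment variable.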

2. SaaS Tools and Workflows

Software-as-a-service (SaaS) applications like Slack, Notion, and Discord support AI agents for routine tasks such as posting updates, flagging action items, and answering FAQs. With tools like Zapier and Make, non-developers can build automated flows that convert form data into leads or trigger support tickets.

3. On-Demand and Logistics Deployment

In on-demand industries like delivery, rideshare, and logistics, AI agents handle routing, scheduling, and inventory triggers. DHL claims AI-driven logistics helped cut delivery time by 25%. From Uber’s dispatch system to Amazon’s warehouse bots, reliable automotive and logistics apps use AI logic to reduce downtime, predict supply needs, and optimize routes.

What are the Three Phases for AI Deployment?

Deploying AI agents is not a one-click job. It’s a strategic process that moves through three phases, and those phases shape how an AI agent deployment strategy works and performs.

1st Phase: Design & Data Collection

You define the agent’s goals and collect ethically sourced, diverse data. Most AI agents built today are ANI (Artificial Narrow Intelligence) systems, which means they are designed for a single task, like chatbots or spam filters. Tools like Vertex AI Agent Builder or MindStudio help speed up design and data collection.

2nd Phase: Training & Testing

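In this phase, the model learns patterns from the data so it can reliably handle the tasks it was designed for. This is still narrow AI, not AGI (Artificial General Intelligence), which is a hypothetical system that could handle a wide range of tasks like a human. Once trained, the agent is stress-tested for errors, bias, and changes in performance.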

3rd Phase: Deployment & Monitoring

The agent goes live inside apps, chatbots, or Slack. You monitor KPIs, catch reliability dips, and run fairness audits. Tools like MindStudio AI handle real-time diagnostics and tweaks. Some forward-looking companies also plan for ASI (Artificial Super Intelligence), a hypothetical future stage where AI could outperform humans across the board.

What Are The Main Challenges in Deploying AI Models?

Deploying AI agents sounds easy on paper. In practice, it’s a different game. The key challenge is managing large volumes of data gathered from multiple sources while ensuring scalability and compliance at the same time.

1. Data Overload and Messiness

AI models need enormous amounts of data from apps, CRM platforms, sensors, spreadsheets, PDFs, and even emails. That data usually arrives in rough, unstructured formats. Cleaning, labeling, and integrating it into pipelines is one of the biggest challenges in AI deployment, because if the input is inconsistent, the model fails. Gartner reports 85% of AI projects fail due to poor data quality.
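As a rough illustration of the arranging and integrating step, the sketch below maps records from different sources onto one schema. It also rejects incomplete records instead of passing them to a model. The field aliases, the `Lead` type, and the validation rules are hypothetical. Real pipelines usually add schema validation and deduplication on top.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Lead:
    email: str
    name: str
    source: str


# Hypothetical aliases: each source system labels the same field differently.
FIELD_ALIASES: Dict[str, Tuple[str, ...]] = {
    "email": ("email", "Email", "email_address", "contact_email"),
    "name": ("name", "Name", "full_name", "contact_name"),
}


def _first_present(record: dict, keys: Tuple[str, ...]) -> Optional[str]:
    """Return the first non-empty string value found under any of the given keys."""
    for key in keys:
        value = record.get(key)
        if isinstance(value, str) and value.strip():
            return value.strip()
    return None


def normalize(record: dict, source: str) -> Optional[Lead]:
    """Map a raw record onto the Lead schema, or return None if it is unusable."""
    email = _first_present(record, FIELD_ALIASES["email"])
    name = _first_present(record, FIELD_ALIASES["name"])
    if email is None or "@" not in email or name is None:
        return None  # reject rather than feed inconsistent input to the model
    # Lowercase emails and collapse repeated spaces in names so duplicates line up.
    return Lead(email=email.lower(), name=" ".join(name.split()).title(), source=source)
```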

2. Model Drift and Reliability Gaps

AI results don’t stay accurate forever. As real-world conditions shift, the model’s predictions gradually get worse, a problem known as model drift. Teams need ongoing performance monitoring and periodic retraining, or they risk running models that silently lose value.
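One common way to catch drift is the Population Stability Index (PSI). It compares how a feature or score is distributed in production against the training baseline, using PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ) over the bins, where eᵢ and aᵢ are the baseline and current shares of each bin. The sketch below assumes you have already bucketed both datasets into the same bins. The 0.1 and 0.25 cut-offs are widely used rules of thumb, not a standard, and the function names are illustrative.

```python
import math
from typing import Sequence


def population_stability_index(
    expected_counts: Sequence[int], actual_counts: Sequence[int], eps: float = 1e-6
) -> float:
    """PSI between a baseline histogram and a current histogram with identical bins."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both histograms need the same bins")
    exp_total, act_total = sum(expected_counts), sum(actual_counts)
    if exp_total == 0 or act_total == 0:
        raise ValueError("histograms must not be empty")
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / exp_total, eps)  # floor avoids log(0) on empty bins
        a_pct = max(a / act_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def drift_status(psi: float) -> str:
    # Rule-of-thumb thresholds; tune them for your own model and data.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"
```

In practice, you might bin a model input such as order value from the training set and from last week’s traffic, then alert the team whenever `drift_status` stops returning `"stable"`.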

3. Data Privacy and Compliance

AI systems often process personal or sensitive data. When you handle healthcare data (HIPAA) or European users’ information (GDPR), compliance isn’t optional: even a minor violation can trigger audits or fines, not to mention damage public trust.
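One practical safeguard is to mask obvious identifiers before text is sent to an external model. This is a minimal sketch that relies on two assumed regular expressions, one for email addresses and one for phone numbers. They are deliberately simple: they will miss some formats and will over-match things like dates. Treat this as one layer of a privacy program rather than a compliance solution on its own.

```python
import re

# Deliberately simple patterns (assumptions): they miss some formats and over-match others.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before text leaves your environment."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits aren't read as phones
    return PHONE_RE.sub("[PHONE]", text)
```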

4. Scaling and Infrastructure Strain

AI workloads can drive up compute demand fast. Running models on large volumes of data, especially generative AI solutions or multi-agent systems, can get expensive. Without the right infrastructure, API rate limits, latency issues, and cost overruns are common.
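Rate limits are usually handled with retries and exponential backoff. The sketch below retries a call with “full jitter,” meaning it waits a random time up to a cap that doubles on each attempt. `RateLimitError` is a hypothetical stand-in for whatever exception your API client raises on HTTP 429. The delay values are placeholders to tune.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            cap = min(max_delay, base_delay * (2 ** attempt))  # 1s, 2s, 4s, ... up to max_delay
            sleep(random.uniform(0, cap))  # random wait spreads out retries from many clients
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the function easy to test. Jitter also stops many agents that hit the limit together from retrying in lockstep.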

5. Integration With Legacy Systems

Most businesses aren’t starting from scratch. Their operations run on older tools and established workflows. Plugging AI into these systems often means writing new connectors, migrating data, or rebuilding APIs, which is time-consuming and can be fragile.

6. Tracking Post-Deployment Behavior

Once deployed, AI agents face inputs and situations that testing never covered, so they can generate unexpected outputs, especially in edge cases. You need dashboards, alerts, and human-in-the-loop checks to catch silent errors or compliance issues. According to a Deloitte survey, 62% of US executives cite integration and monitoring as their biggest AI roadblocks.
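A human-in-the-loop check can be as simple as a routing rule in front of the agent’s outbound actions. In this sketch, replies with low confidence or sensitive keywords go to a review queue and raise an alert instead of being sent automatically. The `confidence` score, the 0.8 threshold, and the keyword list are all assumptions. In practice, the score might come from the model, a separate classifier, or an evaluator.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AgentOutput:
    ticket_id: str
    reply: str
    confidence: float  # assumed 0-1 score from the model or an evaluator


@dataclass
class Router:
    min_confidence: float = 0.8
    blocked_terms: Tuple[str, ...] = ("refund", "legal", "diagnosis")  # hypothetical sensitive topics
    review_queue: List[AgentOutput] = field(default_factory=list)
    alerts: List[str] = field(default_factory=list)

    def route(self, output: AgentOutput) -> str:
        """Return 'auto_send' or 'human_review', queueing and alerting on the latter."""
        if output.confidence < self.min_confidence:
            self.review_queue.append(output)
            self.alerts.append(f"{output.ticket_id}: low confidence {output.confidence:.2f}")
            return "human_review"
        if any(term in output.reply.lower() for term in self.blocked_terms):
            self.review_queue.append(output)
            self.alerts.append(f"{output.ticket_id}: sensitive topic")
            return "human_review"
        return "auto_send"
```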

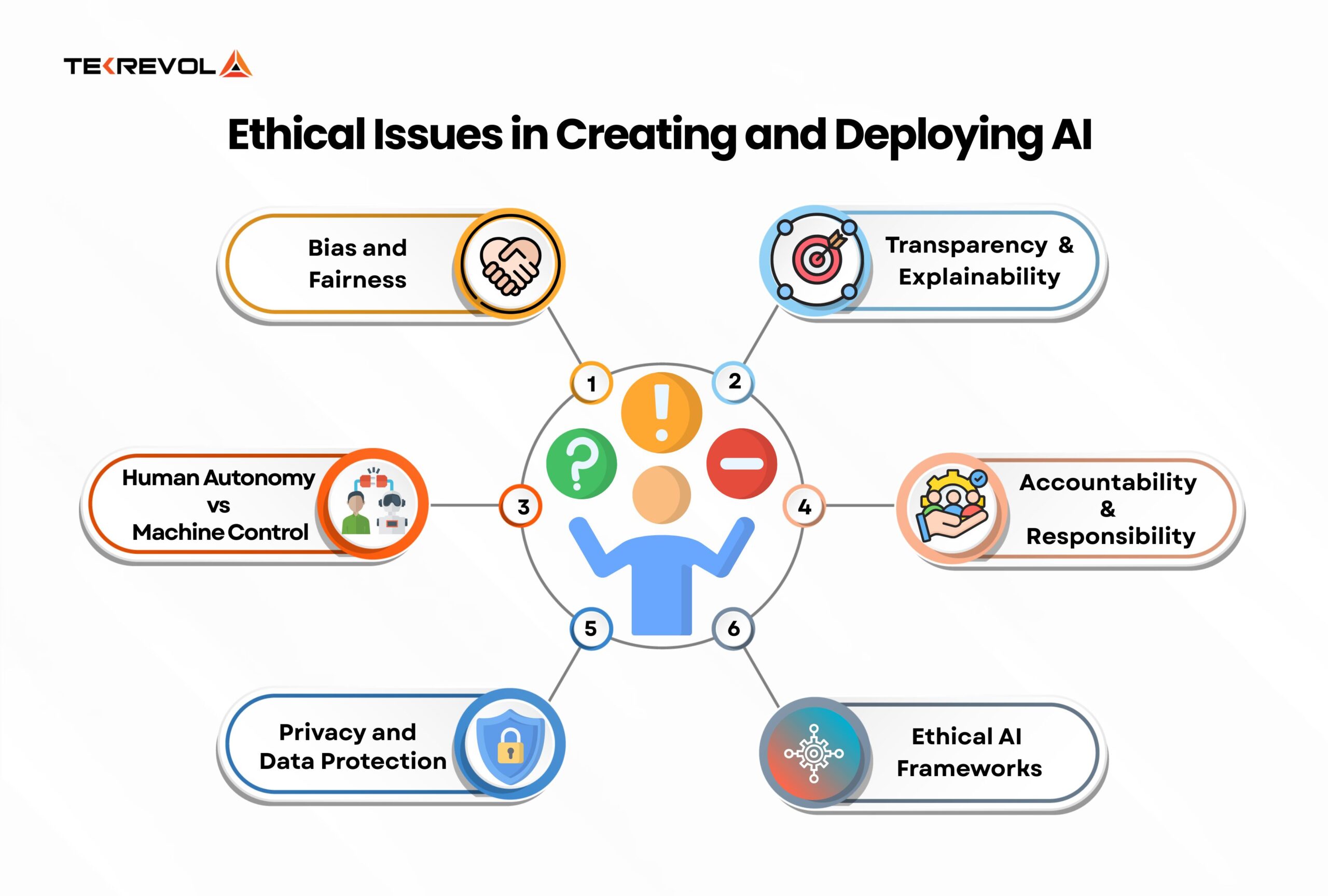

What are the Ethical Issues in Creating and Deploying an AI System?

Bias, lack of transparency, privacy violations, overreliance on automation, and unclear accountability are a few of the primary ethical issues in real-time AI agent deployment.

AI systems can make work easier, but if they are built without ethical guardrails, they can also cause serious harm. Let’s break down the ethical challenges every company must confront when deploying AI agents.

1. Bias and Fairness

If your AI system learns from biased data, it’ll produce biased results. For example, a loan approval model trained on historical lending decisions may keep rejecting applicants from certain ZIP codes. That’s not a glitch; it’s a serious ethical failure.
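A quick first check for this kind of bias is to compare approval rates across groups. The sketch below computes each group’s approval rate and the ratio of the lowest rate to the highest. Ratios below 0.8 are often flagged under the “four-fifths” rule of thumb from US employment-selection guidelines. The ZIP-code group labels and sample decisions are made up for illustration. A low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical decisions: zip_A approved 8/10, zip_B approved 4/10.
decisions = ([("zip_A", True)] * 8 + [("zip_A", False)] * 2
             + [("zip_B", True)] * 4 + [("zip_B", False)] * 6)
ratio = disparate_impact_ratio(approval_rates(decisions))
needs_review = ratio < 0.8  # four-fifths rule of thumb
```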

2. Transparency and Explainability

Many AI models (especially deep learning ones) work like black boxes. They automate or speed up processes, but it’s hard to explain how they reach a specific decision. Without transparency, trust breaks down fast. If an AI system denies someone healthcare coverage or parole, that person deserves to know why.
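For simple linear scoring models, one way to tell people “why” is to use reason codes, which list the features that pulled the score down the most. The sketch below assumes a linear model with pre-scaled features. The feature names and weights are hypothetical. Deep learning models need dedicated explainability techniques instead, because their outputs don’t decompose this neatly.

```python
from typing import Dict, List, Tuple


def reason_codes(
    weights: Dict[str, float], applicant: Dict[str, float], top_n: int = 2
) -> List[Tuple[str, float]]:
    """Features with the most negative contribution (weight * value) to a linear score."""
    contributions = {name: w * applicant.get(name, 0.0) for name, w in weights.items()}
    negatives = [(name, c) for name, c in contributions.items() if c < 0]
    negatives.sort(key=lambda item: item[1])  # most negative first
    return negatives[:top_n]


# Hypothetical scorecard weights and one applicant's scaled features.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.5}
applicant = {"income": 1.0, "debt_ratio": 0.9, "late_payments": 2.0}
codes = reason_codes(weights, applicant)  # late_payments first, then debt_ratio
```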

3. Human Autonomy vs. Machine Control

Human autonomy matters too. AI’s job is to assist, not replace, human judgment, especially in healthcare, criminal justice, and finance, where human insight is irreplaceable. When systems start operating on their own, as in predictive policing or autonomous weapons, we lose human control, which is a dangerous trade-off.

4. Accountability and Responsibility

Experts push for clear accountability because AI isn’t exempt from consequences. When an AI system fails, the developers who built it, the companies that deploy it, and the people who use it all share responsibility for its behavior.

5. Privacy and Data Protection

AI depends on large volumes of data behind the scenes. However, collecting and processing that data without clear consent breaks trust and may violate regulations like GDPR or HIPAA.

6. Need for Ethical AI Frameworks

Guidelines from organizations like IEEE and the European Commission urge developers to embed ethics into every stage of the AI lifecycle. Designing ethical AI isn’t just good practice; it’s a business necessity, because trust matters and unethical systems don’t scale.

What Is a Challenge Associated With Bias in AI Systems?

Bias in AI systems usually stems from skewed training data and leads to unfair decisions. Even “neutral” datasets often reflect past discrimination, which affects real outcomes in hiring, lending, and public safety.

| Type of Bias | Meaning | Example |
| --- | --- | --- |
| Historical Bias | Data reflects past discrimination | Loan approvals favor wealthy ZIP codes |
| Sampling Bias | The dataset excludes key groups | Facial recognition struggles with dark-skinned faces |
| Measurement Bias | Data collected or labeled inaccurately | Health data underestimates pain symptoms in women |
| Aggregation Bias | One model used for diverse users | Job ad algorithm favors male applicants |
| Confirmation Bias | Model learns to reinforce existing stereotypes | Predictive policing sends more patrols to poor areas |

How to Handle Bias in AI Systems

To reduce bias in AI systems, run bias checks early, before the system goes live. If your data skews toward one group, use synthetic samples to even it out. And for high-stakes calls like loans or hiring, keep a human in the loop.
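The sketch below illustrates the synthetic-samples idea for numeric features. It grows each underrepresented group to the size of the largest one by interpolating between random pairs of that group’s existing rows. This is a simplified, SMOTE-style approach, since real SMOTE interpolates toward nearest neighbors. The function names are illustrative. Synthetic rows can still be unrealistic, so generate them from training data only and keep your evaluation set untouched.

```python
import random
from typing import Dict, List, Sequence

Row = List[float]


def synthesize(rows: Sequence[Row], n_new: int, rng: random.Random) -> List[Row]:
    """Create n_new rows by interpolating between random pairs of existing rows."""
    if n_new > 0 and not rows:
        raise ValueError("cannot synthesize from an empty group")
    pool = list(rows)
    new_rows = []
    for _ in range(n_new):
        if len(pool) > 1:
            a, b = rng.sample(pool, 2)
        else:
            a = b = pool[0]
        t = rng.random()  # position along the line segment from a to b
        new_rows.append([x + t * (y - x) for x, y in zip(a, b)])
    return new_rows


def balance_groups(groups: Dict[str, List[Row]], seed: int = 0) -> Dict[str, List[Row]]:
    """Return copies of each group, padded with synthetic rows up to the largest group's size."""
    rng = random.Random(seed)
    target = max(len(rows) for rows in groups.values())
    return {
        name: list(rows) + synthesize(rows, target - len(rows), rng)
        for name, rows in groups.items()
    }
```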

Bias Audit & Fairness Tools

| Tool | Use Case | Highlights |
| --- | --- | --- |
| AI Fairness 360 (IBM) | Detect and fix bias in datasets & models | Open-source, supports Python, broad metrics |
| Fairlearn (Microsoft) | Evaluate model fairness | Integrates with scikit-learn |
| What-If Tool (Google) | Visualize model decisions | Great for model debugging & edge case checks |
| Z-Inspection | Ethical AI risk assessment | Human-centered audit for sensitive use cases |

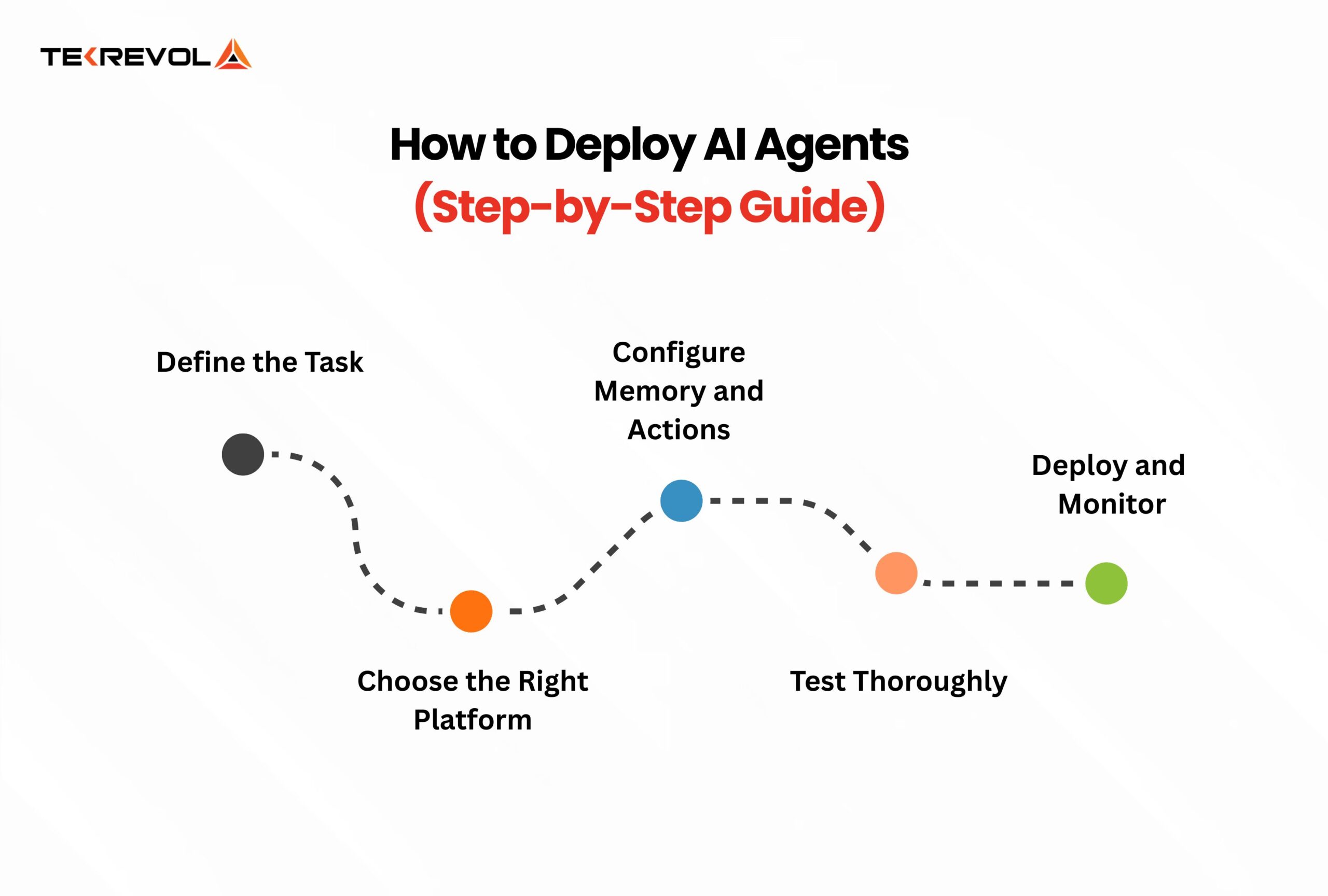

How to Deploy AI Agents in Business? Step-by-Step Process

Deploying an AI agent starts with defining what it is supposed to do, like answering support tickets or summarizing reports. Then you pick the tools, wire it up, and take it live. Here’s how to deploy AI agents:

1. Define the Task

Plan out what you want the AI agent to do and in which operational areas. Whether it will answer customer questions or manage schedules, a clearly defined scope sets you up for success when integrating AI into business systems.

2. Select the Right Platform

Pick a platform that fits your team’s technical skills and needs. Developers often go with OpenAI or Google Vertex AI, while no-code AI tools like MindStudio or Make.com are catching on fast.

Gartner predicts that by 2026, over 80% of enterprises will use no-code or low-code AI tools to speed up deployment.

3. Configure Memory and Actions

Next, decide what info your agent needs to remember and what actions it can perform, like sending emails or pulling data from your CRM. Proper setup here keeps the agent useful and relevant.
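To make memory and actions concrete, here is a minimal sketch of an agent configuration with a bounded memory and an explicit allowlist of actions. The `AgentConfig` class, the `send_email` and `lookup_crm` stubs, and the memory size are hypothetical. Real platforms expose their own tool and memory settings, but the principle of letting the agent call only the actions you registered carries over.

```python
from collections import deque
from typing import Callable, Deque, Dict


class AgentConfig:
    """Hypothetical agent setup: a short rolling memory plus an allowlist of actions."""

    def __init__(self, memory_size: int = 5) -> None:
        self.memory: Deque[str] = deque(maxlen=memory_size)  # oldest notes drop off automatically
        self.actions: Dict[str, Callable[..., str]] = {}

    def register(self, name: str, fn: Callable[..., str]) -> None:
        self.actions[name] = fn

    def perform(self, name: str, **kwargs: str) -> str:
        if name not in self.actions:
            raise PermissionError(f"action '{name}' is not allowed for this agent")
        result = self.actions[name](**kwargs)
        self.memory.append(f"{name}: {result}")  # remember what the agent did
        return result


# Hypothetical stubs; in production these would call your email service and CRM.
def send_email(to: str, subject: str) -> str:
    return f"queued email to {to} ({subject})"


def lookup_crm(customer_id: str) -> str:
    return f"customer {customer_id}: tier=gold"


agent = AgentConfig(memory_size=2)
agent.register("send_email", send_email)
agent.register("lookup_crm", lookup_crm)
```

Keeping the allowlist explicit means a misbehaving prompt can’t trigger an action you never wired up. In that case, the agent raises `PermissionError` instead.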

4. Test Thoroughly

Then run the agent through real-world scenarios to find bugs or gaps, and adjust responses and flows until it performs reliably.

5. Deploy and Monitor

Finally, connect your AI agent to your live systems, like Slack or your website. Once it’s live, track its performance regularly and make tweaks as needed.

Why TekRevol Is Your Trusted Partner to Deploy AI Agents

Building an AI agent is one thing, and deploying it securely, ethically, and at scale is another. That’s where TekRevol steps in. As a leading AI agent development company, we bring deep expertise across GPT-based systems, no-code platforms, and fully custom AI agent deployment strategies.

Our team builds with ethics in mind, prioritizing bias checks, user privacy, and transparent AI behavior. We’ve helped startups and enterprises integrate AI into business systems that perform reliably under pressure and stay compliant.
